Hands-Off Development

Recently, I noticed a big shift in my use of coding agents: I switched from assistants in IDEs to a console interface. In other words, I now spend more time with Claude Code than with Cursor.

I think this happened because I started feeling comfortable offloading ever larger chunks of work to the coding agents themselves. I’m steering them at an ever higher level and writing less code by hand. This matches an observation by Simon Willison: the models got incrementally better until the new ones (GPT-5.2 and Opus 4.5) reached an inflection point, where they suddenly became helpful on much harder problems.

That tweet about the inflection point. Source

The 2025 workflow

So, my 2024-2025 style of coding with agents was mostly about giving direct, detailed instructions on exactly what needed to be done. Most of the time, I had already pictured in my head exactly what had to be implemented. Without agents, I would have spent the next 30 minutes to a few hours coding that vision. With Cursor, instead of writing the code myself, I gave very precise instructions on what to write and provided enough context. Since I’m quite opinionated about how my code should look and be organized, I also passed Cursor rules into the context for the agent to follow.

As coding agents got smarter, I grew more comfortable handing them more and more tasks.

My prompt for these tasks would look like this:

Create a new Django model AccountSettings with a settings field to store account settings in a JSON field. Create a new model in the @users/models.py file. Create a Django model admin to modify settings. Update @users/services.py to create necessary service functions to modify user settings (use @teams/services.py as a role model). Create tests and ensure they pass. See @.cursor/rules/django-models.mdc and @.cursor/rules/django-tests.mdc. Read Linear task PROJECT-123 for more context.

This is a hypothetical prompt, but a typical one. A few things are worth pointing out:

- I help the agent with the task by sharing as much context as I have, both in my head and in the codebase.

- I explicitly point to the files where the code has to be modified and to similar code that can serve as a role model, and I even explicitly include the Cursor rules to follow so they aren’t ignored. Drag-and-drop in Cursor makes it easy to populate the context from currently open files.

- Over time, I learned that agents don’t need detailed instructions to write simple “derivative code” such as tests or Django ModelAdmin classes. With clear project rules, the agent usually writes them well, with little or no manual correction.

- Over time, I also learned not to copy-paste anything the agent can get by itself. I connected MCP servers and had the agents rely more on MCP and command-line tools. This is where “Read Linear task PROJECT-123 for more context” comes from: the Linear MCP server lets the agent populate its own context. I don’t give direct instructions on how to use this context; I add it to help the agent see “the bigger picture”.

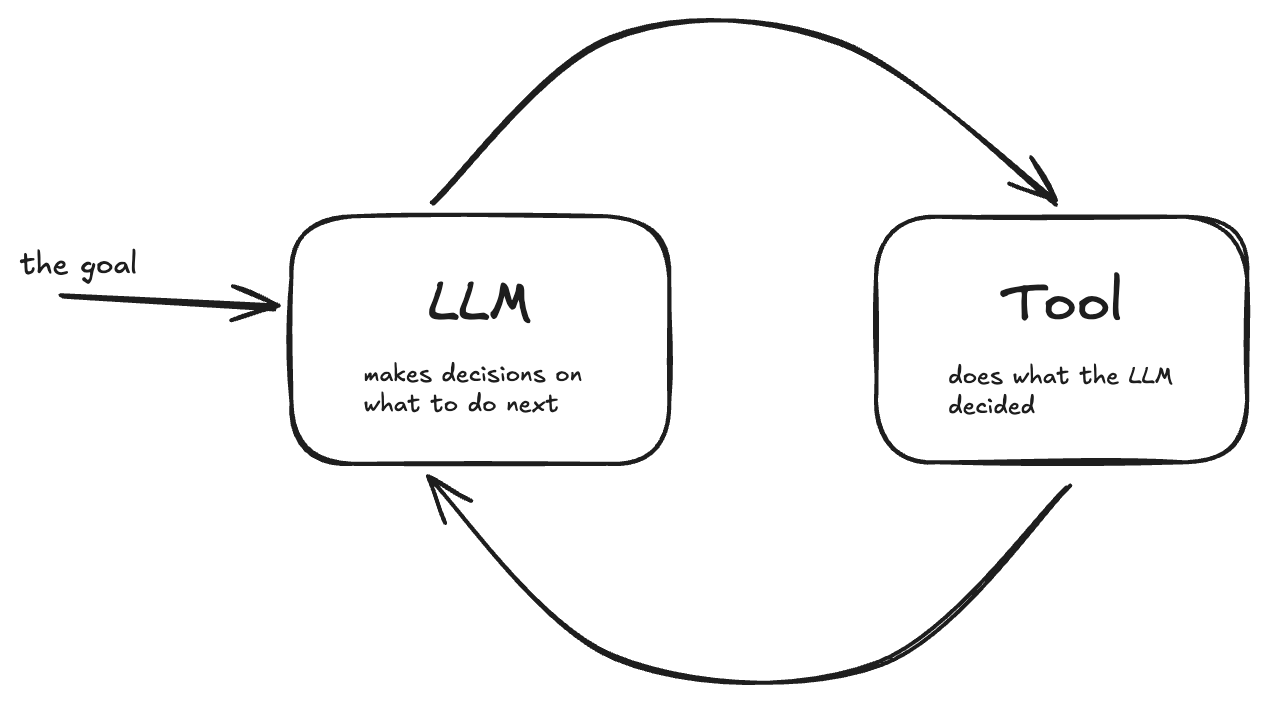

- A big conceptual shift for me was learning to tell an agent to “ensure tests pass”, which turns it from a one-step machine converting input to output into an autonomous agent running the now-famous agentic loop.

Here is the simplest definition of an agentic loop, borrowed from this blog post about a mental model for agentic AI applications:

The simplest agentic loop definition. Source
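To make the loop concrete, here is a minimal Python sketch of the idea. It is not taken from the linked post: the model is a stub standing in for an LLM call, and `Action`, `agentic_loop`, `run_tests`, `edit_code`, and `fake_model` are hypothetical names made up for illustration. The agent repeatedly picks a tool, observes the result, and feeds it back into its history until it decides it’s done or runs out of steps.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

History = List[Tuple[str, str]]  # (role, message) pairs the "model" sees


@dataclass
class Action:
    tool: Optional[str]  # tool to call next, or None when the agent is done
    argument: str = ""   # input passed to the tool
    answer: str = ""     # final answer, used only when tool is None


def agentic_loop(
    model: Callable[[History], Action],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_steps: int = 10,
) -> str:
    history: History = [("user", task)]
    for _ in range(max_steps):
        action = model(history)  # decide the next step from everything seen so far
        if action.tool is None:
            return action.answer  # the agent considers the task finished
        result = tools[action.tool](action.argument)  # act on the environment
        history.append(("tool", f"{action.tool}: {result}"))  # observe the outcome
    raise RuntimeError("agent did not finish within max_steps")


# Hypothetical tools: tests fail until the code has been "edited".
state = {"fixed": False}


def run_tests(_: str) -> str:
    return "passed" if state["fixed"] else "failed"


def edit_code(_: str) -> str:
    state["fixed"] = True
    return "edited"


def fake_model(history: History) -> Action:
    # Stub policy standing in for an LLM: run tests, fix on failure, stop on success.
    last = history[-1][1]
    if last.endswith("passed"):
        return Action(tool=None, answer="All tests pass.")
    if last.endswith("failed"):
        return Action(tool="edit_code", argument="fix the failing test")
    return Action(tool="run_tests")


tools = {"run_tests": run_tests, "edit_code": edit_code}
print(agentic_loop(fake_model, tools, "Ensure tests pass"))  # prints: All tests pass.
```

The `max_steps` cap is a design choice: without it, an agent that never converges would loop forever. A check like “ensure tests pass” is what gives the agent a stopping condition it can verify on its own.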

What changed in 2025-2026

Agents got better, as did my understanding of where I can become more hands-off with them.

The two biggest things that changed what I could do with agents were probably these:

- Understanding that agents with enough tooling can gather the necessary information themselves. I can trust them with this, and I can help them by providing good tooling (MCP servers, CLI scripts, instructions, etc.).

- Understanding the agentic loop better and relying on it more: letting agents work iteratively.

The importance of tooling was emphasized in Armin Ronacher’s Agentic Coding Recommendations blog post from June 2025, and it stuck with me. Its core idea is that you must invest in helpful tools that are self-discoverable, reasonably fast to execute, and reliable to run, because that’s what drives the flywheel of a more efficient agentic loop.

Similar to “Read Linear task PROJECT-123 for more context”, I started relying more on shorter, higher-level instructions like “Address the PR feedback”, knowing the agent is smart enough to run “gh” to get the PR comments and act on them, or “CI tests failed, please explore what’s wrong and fix them”. The best part is that the agent knows better than I do how to use “gh” to find the latest failed CI run and fetch its logs.

Side note: Yes, I say please to agents quite often. It feels more natural this way.
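For the curious, here is roughly the kind of “gh” sequence an agent might come up with for the “CI tests failed” request, written as a small Python sketch. The helper names are hypothetical and agents won’t necessarily do it this way. The flags used (`--branch`, `--status`, `--limit`, `--json`, `--jq` on `gh run list`, and `--log-failed` on `gh run view`) are GitHub CLI options, but it’s worth checking `gh run list --help` on your version.

```python
import subprocess
from typing import List


def latest_failed_run_cmd(branch: str) -> List[str]:
    # Ask for the most recent failed workflow run on the branch, returning only its ID.
    return [
        "gh", "run", "list",
        "--branch", branch,
        "--status", "failure",
        "--limit", "1",
        "--json", "databaseId",
        "--jq", ".[0].databaseId",
    ]


def failed_logs_cmd(run_id: str) -> List[str]:
    # Print only the logs of the failed steps of that run.
    return ["gh", "run", "view", run_id, "--log-failed"]


def fetch_failed_ci_logs(branch: str) -> str:
    # Requires an authenticated `gh` inside a GitHub repository checkout.
    run_id = subprocess.run(
        latest_failed_run_cmd(branch), capture_output=True, text=True, check=True
    ).stdout.strip()
    if not run_id or run_id == "null":  # no failed runs on this branch
        return ""
    return subprocess.run(
        failed_logs_cmd(run_id), capture_output=True, text=True, check=True
    ).stdout
```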

And all of a sudden, with these changes, I found that I no longer need an IDE to talk to these agents. I use the IDE mostly to populate the agent’s context and to “think high level”. Since agents got smarter and, probably more importantly, faster, I can be more hand-wavy with them and let them figure out for themselves what they need to learn.

I’ve heard some hardcore “agent developers” say they don’t open their IDE at all anymore because the console is all they need. I’m not in that camp, as I rely on the IDE to make sense of the code. I test and review what agents write, but switching between the IDE and the console UI to give feedback doesn’t bother me, because I don’t micromanage.

In 2025, I already thought of coding agents as “very diligent newcomers to your organization”. Initially, I used the term “junior developers”, but they’re not junior at all: you don’t babysit them. They’re senior, but it’s their first day in your organization, so you need to give them very detailed instructions on how the task has to be implemented and feed in as much context as they need.

I’ve heard several programmers complain that agents now do all the fun parts of working with code. I disagree, at least for myself. If anything, I have even more fun. Software development feels more intellectually challenging and rewarding than before, because I spend more time thinking about hard problems, like how to properly architect a solution, and much less time on monkey coding.

I suspect the inflection point Simon is talking about came from the gradual increase in both the intelligence and the speed of agents. Eventually, this lets us rely on them to figure out both the bigger picture and the existing patterns, better and faster. They’re still on their first day with your codebase, but they learn the ropes much faster. That’s the shift.